Welcome to Fanghui Liu's Homepage!

I received my Bachelor’s degree in Automation from Harbin Institute of Technology in 2014 and my PhD degree from Shanghai Jiao Tong University (SJTU) in 2019. I subsequently held postdoctoral research positions at KU Leuven and EPFL from 2019 to 2023. Since then, I have held faculty positions or affiliations at the University of Warwick, the Centre for Discrete Mathematics and its Applications (DIMAP), the Technical University of Munich (Global Visiting Professor), and Shanghai Jiao Tong University. My honors include the AAAI’24 New Faculty Award, Rising Stars in AI Symposium (KAUST 2023), and several Excellent Doctoral Dissertation Awards in 2019.

Email: x@y with x=fanghui.liu, y=sjtu.edu.cn or warwick.ac.uk or epfl.ch

This website was updated on Dec. 22nd, 2025.

Research Interests

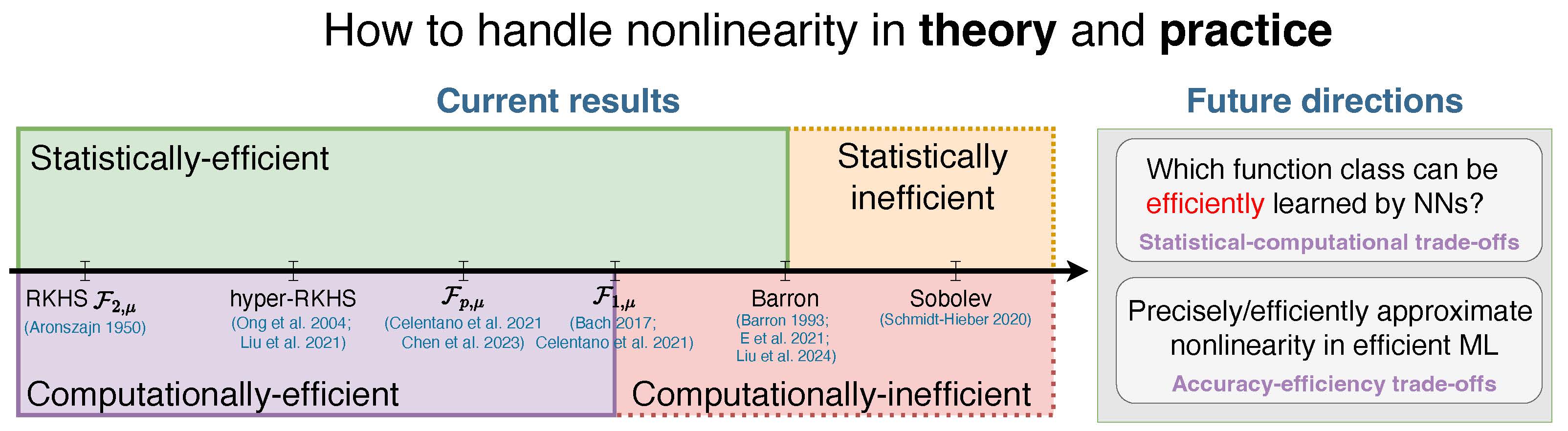

I'm generally interested in the foundations of modern machine learning through the lens of learning efficiency, both theoretically and empirically. My research aims to understand how to handle nonlinearity at a theoretical level and how to approximate it precisely and efficiently at a practical level under theoretical guidelines, a longstanding question across science, technology and engineering.

My research (past, ongoing, and future) focuses on the following directions:

machine learning theory: what is the largest function space that can be learned by neural networks, both statistically and computationally efficiently (computational-statistical gaps)?

post-training in LLMs: expand the frontiers of empirical and theoretical knowledge on when and where to fine-tune/inference, and how much we can fine-tune/inference, precisely, efficiently, and robustly

My research is supported by grants from the Royal Society (UK), the Alan Turing Institute (UK), and DAAD (Germany).

We're organising an IJCV Special Issue on Post-Training in Large Language Models for Computer Vision!