Welcome to Fanghui Liu (刘方辉)'s Homepage!

Fanghui Liu

Assistant Professor at Department of Computer Science, University of Warwick, UK

Faculty member of Centre for Discrete Mathematics and its Applications (DIMAP), Warwick

Email: x@y with x=fanghui.liu and y=warwick.ac.uk

[Google Scholar]

[OpenReview]

[homepage at Warwick]

[speaker bio]

at Zermatt, Switzerland (Aug. 2022)

About me

I'm currently an assistant professor in the Department of Computer Science, a faculty member of the Centre for Discrete Mathematics and its Applications (DIMAP), and an affiliated member of the Division of Theory and Foundations (FoCS), at the University of Warwick, UK. I am an ELLIS Member and IEEE Senior Member.

Here is my CV.

We're organising Warwick Foundation of AI seminar!

Research Interests

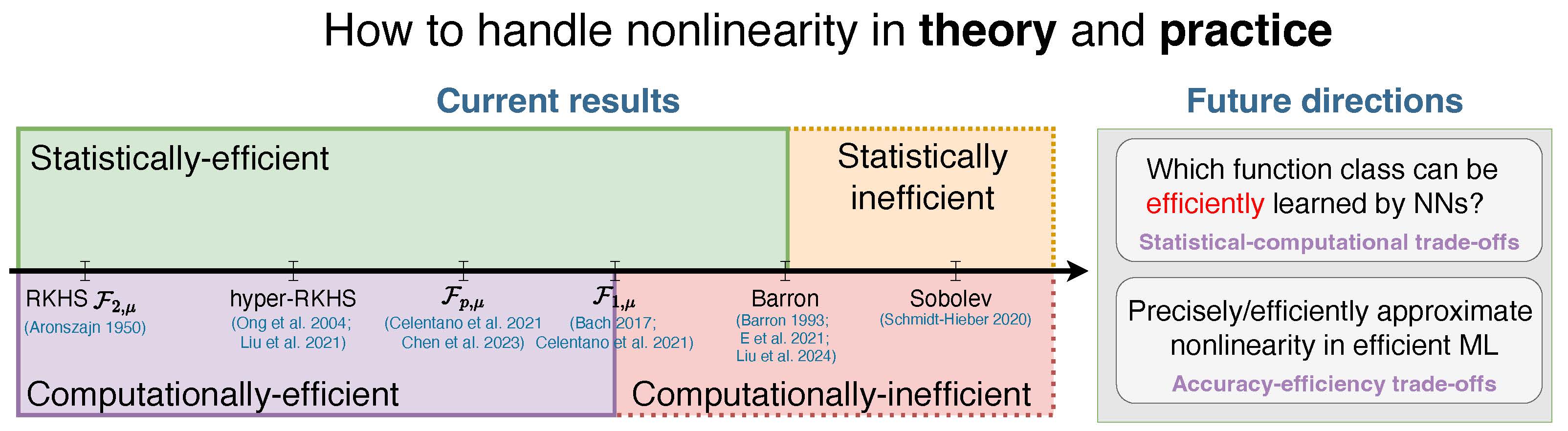

I'm generally interested in the foundations of modern machine learning through the lens of learning efficiency, both theoretically and empirically. My research addresses how to handle nonlinearity at a theoretical level and how to approximate nonlinearity precisely and efficiently at a practical level under theoretical guidelines, a longstanding question across science, technology and engineering.

My research (the past, ongoing and future) focuses on the following directions:

machine learning theory: what is the largest function space that can be learned by neural networks, both statistically and computationally efficiently (computational-statistical gaps)?

post-training in LLMs: expand the frontiers of empirical and theoretical knowledge on when and where to fine-tune/inference, and how much we can fine-tune/inference, precisely, efficiently, and robustly

My research is supported by grants from the Royal Society (UK), the Alan Turing Institute (UK), and DAAD (Germany).

Jobs

I am looking for motivated Ph.D. students for Fall 2026 to work with me on learning theory or principles of LLMs. See Open positions for details.

As our department requires a research proposal as part of the PhD application, I strongly encourage interested candidates to contact me in advance of the application deadline (check website for details). If you are a strong fit based on my evaluation, I will spend some time supporting you in preparing a competitive proposal and application.

Due to the large number of requests, I unfortunately may not be able to reply to all emails regarding PhD applications. However, I will look at every applicant who has emailed me and will contact you soon if your enquiry is in line with my research.

News

[2025-05] One paper on LoRA (from theory to practice) was accepted by ICML’25 as Oral. Congratulations to Yuanhe.

[2025-02] Attended 2025 ITA Workshop and visited UCLA.

[2025-01] One paper was accepted by ICLR’25. It's about how gradient descent balances features after weak recovery.

[2024-12] We organised a workshop, Fine-Tuning in Modern Machine Learning: Principles and Scalability, at NeurIPS 2024!

[2024-09] I will attend the Mathematical Aspects of Learning Theory Workshop - 20 years later.

[2024-05] Two papers were accepted by ICML 2024: one is about high-dimensional kernel methods under distribution shift; the other is about adversarial attacks on foundation models.

[2024-04] One paper was accepted by JMLR on the separation between kernel methods and neural networks from the perspective of function space.

[2023-04] We will give a tutorial entitled Scaling and Reliability Foundations in Machine Learning at the 2024 IEEE International Symposium on Information Theory (ISIT) in Athens, Greece, in July.

[2024-04] Awarded the DAAD AInet Fellowship, which is awarded to outstanding early career researchers. Topic: Safety and Security in AI.

[2024-03] The adversarially trained NAS benchmark (NAS-RobBench-201) in our ICLR 2024 paper was released! See [project website] for details.

[2024-01] Three papers were accepted by ICLR 2024: generalization of ResNets; robust NAS from benchmark to theory; local-linearity for catastrophic overfitting. I will be in Vienna. Feel free to chat.

[2023-12] Selected to give a talk at AAAI 2024 New Faculty Highlights.

[2023-09] Two papers were accepted by NeurIPS 2023: one is about global convergence of Transformers; the other is about how over-parameterization affects differential privacy. I will be in New Orleans (again and again). Feel free to chat.

[2023-06] Here are the slides of our CVPR 2023 tutorial.

[2023-06] Here are the slides of our ICASSP 2023 tutorial.

[2023-04] Two papers were accepted by ICML 2023: one is about function approximation in online RL; the other is related to benign overfitting. I will be in Hawaii! Feel free to chat.

[2023-04] Selected as a Notable Reviewer for ICLR 2023.

[2023-02] We will give a tutorial entitled Deep learning theory for computer vision at IEEE CVPR 2023 in Vancouver, Canada.

[2023-02] I will attend the Rising Stars in AI Symposium 2023 at KAUST in Saudi Arabia (Feb. 19-21).

[2022-12] We will give a tutorial entitled Neural networks: the good, the bad, the ugly at IEEE ICASSP 2023 on the Greek island of Rhodes.

[2022-09] Six papers were accepted by NeurIPS 2022.

[2021-10] One paper on double descent of RFF with SGD was posted on arXiv.

[2021-10] One paper was accepted by IEEE Transactions on Pattern Analysis and Machine Intelligence.

[2021-07] One paper was accepted by IEEE Transactions on Pattern Analysis and Machine Intelligence.

[2021-06] One paper was accepted by Journal of Machine Learning Research.

[2021-02] One paper was accepted by Machine Learning.

[2021-01] Two papers were accepted by AISTATS 2021.

[2020-10] One paper was accepted by Journal of Machine Learning Research.